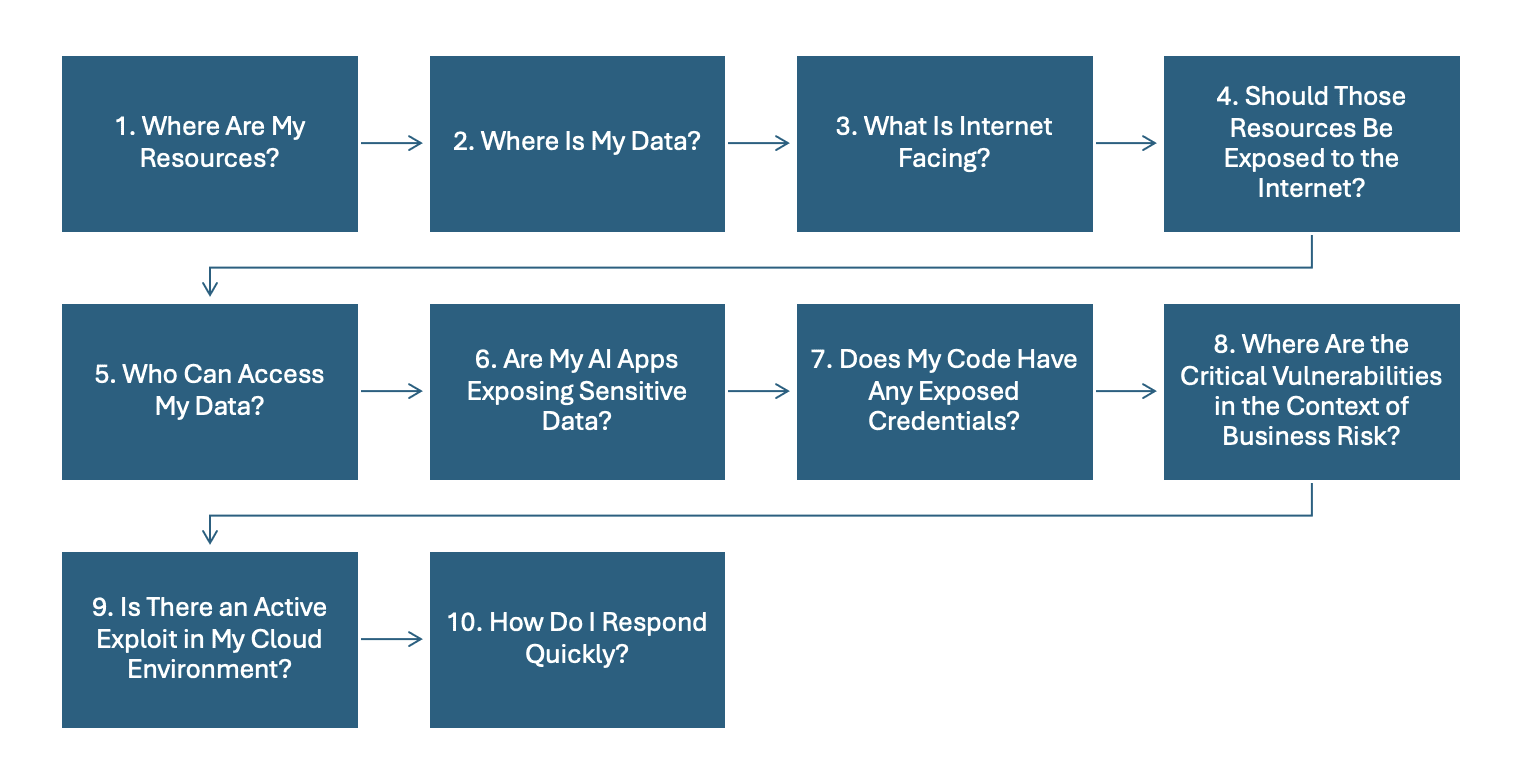

Top 10 Strategic Questions in Cloud Security

Uncertainty in the cloud demands attention. This article presents a set of strategic questions that cut through the noise and guide discovery for securing your cloud environments.

Executives and business leaders often assume that once systems are deployed to the cloud, they are secure. That is, until an incident exposes security gaps no one knew existed.

Cloud architectures are constantly evolving. Workloads are spun up and down, infrastructure is scaled out and in, and services are added, expanded, restricted, and terminated, often in response to automated events and triggers. Meanwhile, cloud providers release new services and features daily.

Without effective visibility and centralized oversight, significant sprawl and blind spots can develop. The ten questions below are structured to uncover the gaps that create real operational and security risk.

1. Where Are My Resources?

Organizations rarely maintain a continuously accurate cloud inventory.

Key Considerations:

Implementing change detection across your cloud infrastructure is critical. It should capture the cloud provider, partition (commercial, government, top secret, etc.), region, zone, and tenancy in which each resource change occurs.

- Inventory drift across AWS, Azure, and OCI is a common challenge. Automated infrastructure provisioning systems, such as infrastructure as code and configuration management, are effective tools for mitigating drift.

- Shadow IT workloads and untracked subscriptions occur when resources are provisioned by individuals outside of standard processes. Users should be educated on provisioning procedures, and cloud platforms should be monitored for new accounts and resources created by users with email addresses associated with your domain.

- Orphaned assets are often overlooked, yet they continue to incur costs, expand the attack surface, and remain vulnerable. To address this, track resource usage, identify resources that have been unused for a defined period, and automate notifications (and potentially termination) based on each resource's lifecycle and usage.
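As a minimal sketch of the idle-resource check, assume the inventory has already been exported into simple records. The `Resource` fields and the `find_idle_resources` helper below are hypothetical, not part of any provider SDK; the logic simply compares each resource's last observed activity against a cutoff.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass
class Resource:
    # Hypothetical inventory record; real fields depend on each provider's APIs.
    resource_id: str
    provider: str        # e.g. "aws", "azure", "oci"
    region: str
    last_used: datetime  # last observed activity, timezone-aware


def find_idle_resources(resources, max_idle_days, now=None):
    """Return resources with no recorded activity in the last max_idle_days days."""
    if now is None:
        now = datetime.now(timezone.utc)
    cutoff = now - timedelta(days=max_idle_days)
    return [r for r in resources if r.last_used < cutoff]


if __name__ == "__main__":
    now = datetime(2025, 6, 1, tzinfo=timezone.utc)
    inventory = [
        Resource("vm-web-01", "aws", "us-east-1", now - timedelta(days=2)),
        Resource("disk-old-07", "azure", "westeurope", now - timedelta(days=120)),
    ]
    for r in find_idle_resources(inventory, max_idle_days=90, now=now):
        # A real pipeline would notify the owner here before any termination.
        print(f"idle: {r.provider}/{r.region}/{r.resource_id}")
```

The 90-day threshold is an illustrative assumption; the right period depends on each workload's expected lifecycle.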

2. Where Is My Data?

Data location dictates security, compliance, and governance obligations.

Key Considerations:

Proper cloud resource governance services and systems do more than identify where cloud resources have been provisioned. They also enforce guardrails that prevent data from being stored in unintended locations.

- Data sprawl must be actively managed. Like cloud services and resources, the data stored within workloads and the data those workloads generate must be controlled and governed. The cloud offers a variety of storage types, including object storage, file storage, block (volume) storage, databases, message queues, and code repositories. Each storage type requires a tailored monitoring approach.

- Sensitive data stored outside approved boundaries is a common challenge. Data should be classified (tagged) as it is ingested into the cloud environment. Once classified, automation tools can manage and govern it appropriately. Unclassified data should be treated with the highest level of sensitivity.

- Replicas and backups can end up outside intended regions if redundancy is not carefully configured. While replication and redundancy are essential for disaster recovery and business continuity, storing copies of data in multiple locations increases exposure and must be carefully managed to maintain compliance.
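The rule that unclassified data should be treated as highest sensitivity can be expressed directly in a placement check. This is a minimal sketch that assumes each stored object carries a `classification` tag and that a hypothetical `ALLOWED_REGIONS` policy maps each level to its approved regions; the region names and levels are illustrative only.

```python
from enum import IntEnum


class Sensitivity(IntEnum):
    PUBLIC = 0
    INTERNAL = 1
    CONFIDENTIAL = 2
    RESTRICTED = 3


# Hypothetical placement policy: regions where each classification may be stored.
ALLOWED_REGIONS = {
    Sensitivity.PUBLIC: {"us-east-1", "eu-west-1", "ap-southeast-2"},
    Sensitivity.INTERNAL: {"us-east-1", "eu-west-1"},
    Sensitivity.CONFIDENTIAL: {"us-east-1"},
    Sensitivity.RESTRICTED: {"us-east-1"},
}


def effective_sensitivity(tags):
    """Missing or unrecognized classification tags default to the highest level."""
    label = str(tags.get("classification", "")).upper()
    return Sensitivity.__members__.get(label, Sensitivity.RESTRICTED)


def placement_violations(objects):
    """Yield (object_id, region, level) for data stored outside its approved regions."""
    for object_id, region, tags in objects:
        level = effective_sensitivity(tags)
        if region not in ALLOWED_REGIONS[level]:
            yield object_id, region, level
```

Defaulting to `RESTRICTED` means an untagged backup replicated to an unexpected region is flagged rather than silently allowed.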

3. What Is Internet Facing?

You cannot defend what you do not know is exposed.

Key Considerations:

It is critical to know whether web services and APIs are public-facing or internal. The public footprint should be minimized and protected with the highest level of security, including firewalls and encryption. Both internal and external traffic require proper inventory and testing, but services exposed to the Internet demand the greatest attention, protection, and rigorous testing.

- Common public-facing endpoints include public load balancers, API gateways, CDN origins, storage access gateways, network ingress points, and application firewalls. All major cloud providers offer native protections for these ingress services that can help secure them effectively.

- Unintended public buckets, serverless endpoints, and development environments are frequently found in enterprise cloud deployments. Regular testing and monitoring are required to detect and close these unintended access points quickly.

- Administrative interfaces and management ports are among the highest-risk endpoints. These interfaces should remain private and never be exposed to the Internet; access should be limited to authorized personnel connecting over a VPN.
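One way to check for exposed management ports is to scan firewall or security-group rules for any-address sources. The sketch below assumes rules have already been exported into a hypothetical dict format (`id`, `cidr`, `from_port`, `to_port`). The port list covers SSH (22), RDP (3389), WinRM (5985/5986), and the common Kubernetes API server port (6443), and it is illustrative rather than complete.

```python
import ipaddress

# Commonly targeted management ports: SSH, RDP, WinRM (HTTP/HTTPS), Kubernetes API.
ADMIN_PORTS = {22, 3389, 5985, 5986, 6443}


def is_open_to_internet(cidr):
    """Treat any-address sources (0.0.0.0/0 or ::/0) as open to the Internet."""
    return ipaddress.ip_network(cidr, strict=False).prefixlen == 0


def exposed_admin_rules(rules):
    """Return (rule_id, [ports]) for rules exposing admin ports to any address."""
    findings = []
    for rule in rules:
        if not is_open_to_internet(rule["cidr"]):
            continue
        ports = sorted(p for p in ADMIN_PORTS if rule["from_port"] <= p <= rule["to_port"])
        if ports:
            findings.append((rule["id"], ports))
    return findings
```

Checking port ranges, not just single ports, matters because an "allow all ports" rule exposes every management interface at once.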

4. Should Those Resources Be Exposed to the Internet?

Exposure alone isn’t the problem; unnecessary exposure is.

Key Considerations:

Maintaining an inventory of publicly accessible resources is not sufficient. It is also critical to understand which services operate behind these endpoints, what data and functionality are exposed, who is responsible for managing them, who their users are, and what business needs they serve. Without this understanding, it is impossible to determine whether the level of exposure is justified for legitimate business purposes.

- Business justifications for public exposure should be formally documented and approved. This ensures stakeholders are aware of the risks and have explicitly accepted them.

- Private endpoints and service meshes should be evaluated as alternatives to public exposure. Each justification should include this assessment, confirming that any accepted high risk cannot be reduced or mitigated through other technologies.

- Segmentation and network access controls must be aligned with the actual network topology, including the controls that protect both north-south (ingress and egress) and east-west (internal) traffic. Without a clear view of that topology, it is not possible to design effective access control policies between segments.
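The documentation requirement above can be checked mechanically by comparing the inventory of public resources against a register of justifications. This is a minimal sketch under stated assumptions: the `Justification` record, its fields, and the `unjustified_exposures` helper are hypothetical, and the register itself might live in a ticketing system or a version-controlled file.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Justification:
    # Hypothetical record of an approved exception for public exposure.
    resource_id: str
    business_need: str
    approved_by: str             # empty string means not yet approved
    alternatives_reviewed: bool  # were private endpoints or a service mesh evaluated?


def unjustified_exposures(public_resource_ids, justifications):
    """Map each public resource lacking a complete, approved justification to the gap."""
    by_id = {j.resource_id: j for j in justifications}
    gaps = {}
    for rid in sorted(public_resource_ids):
        j = by_id.get(rid)
        if j is None:
            gaps[rid] = "no justification on record"
        elif not j.approved_by:
            gaps[rid] = "not approved"
        elif not j.alternatives_reviewed:
            gaps[rid] = "alternatives not assessed"
    return gaps
```

Anything returned by this check is exposure that no stakeholder has explicitly accepted.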

5. Who Can Access My Data?

Identity is the new perimeter.

Key Considerations:

Data is an organization’s most valuable asset aside from its people. Once data mapping and classification controls are established, the next step is ensuring that access rights align with the sensitivity and intended handling of that data. Effective access governance ensures only authorized identities, human or machine, can reach the data appropriate for their roles.

- Over-provisioned IAM roles and policies are extremely common in modern cloud environments. Many organizations operate hundreds or thousands of roles governing tens of thousands of granular service permissions. The sheer scale makes manual review unsustainable, requiring automation, aggregation tools, and continuous monitoring to manage privileges effectively and prevent unnecessary access.

- Lateral movement paths across tenants remain difficult to identify and control. Cross-tenancy roles can be valuable for operational efficiency, enabling workloads in one tenant to interact with resources in another. However, once such trust relationships are created, visibility often diminishes. It becomes challenging to track which identities are assuming these roles, how often they are used, and whether their permissions remain justified.

- Contractors, service accounts, machine identities, and federated identities require especially careful governance. These identities are frequently granted access rapidly to meet operational demands, sometimes without sufficient evaluation of the privileges assigned. Without clear ownership, lifecycle management, and strict access boundaries, these identities can accumulate excessive permissions and introduce significant long-term risk.
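At the scale described above, the core of automated privilege review is a set difference: permissions granted minus permissions actually exercised over an observation window. The sketch below assumes both sides have already been collected into plain dictionaries; the identity and permission names are illustrative. In AWS, for example, IAM last-accessed information is one possible source for the "used" side.

```python
def unused_permissions(granted, used):
    """Per identity, return permissions granted but never exercised in the window.

    granted: identity -> set of permission strings attached to it
    used:    identity -> set of permission strings observed in logs or usage data
    """
    findings = {}
    for identity, perms in granted.items():
        unused = perms - used.get(identity, set())
        if unused:
            findings[identity] = sorted(unused)
    return findings


if __name__ == "__main__":
    granted = {
        "role/ci-deploy": {"s3:PutObject", "s3:DeleteBucket", "iam:PassRole"},
        "user/contractor-a": {"s3:GetObject"},
    }
    used = {
        "role/ci-deploy": {"s3:PutObject"},
        "user/contractor-a": {"s3:GetObject"},
    }
    for identity, perms in unused_permissions(granted, used).items():
        print(identity, "->", perms)
```

Unused permissions are candidates for removal, not proof of excess. Rarely exercised but legitimate permissions, such as break-glass access, still need human review.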

6. Are My AI Apps Exposing Sensitive Data?

AI adoption is outpacing governance.

Key Considerations:

AI systems are often implemented first and secured second. This pattern is common with new technologies and innovative organizations, but the broad and often sensitive data access granted to AI introduces new vulnerabilities and expands the potential for unintended privileged access.

- A foundational step in securing any technology is understanding what assets and workflows require protection. AI implementations often begin as shadow IT, but once integrated into enterprise environments, they require formal discovery, workload identification, and documentation. By identifying which workloads incorporate AI, along with mapping data flows into and out of these components, organizations can determine where safeguards must be implemented and what controls are most appropriate.

- One of the most common first lines of defense is the use of input and output filters. Input filters prevent users from submitting restricted words, phrases, or prompt patterns. Output filters restrict what information an AI model is permitted to return, ensuring sensitive or unintended data is not exposed.

- Observability and visibility are crucial. All prompts, inputs, and outputs must be securely logged to support incident analysis and forensic investigations. If prompt-injection or abuse attempts occur, logs must provide sufficient detail to analyze attack patterns, and automated responses may be necessary to block future activity from offending users or compromised accounts. As with any sensitive logging system, prompt and output records must be protected, encrypted, and access-controlled.

- For organizations fine-tuning models, training data must be thoroughly sanitized. It should be free of malware, harmful commands, and unsafe content. Fine-tuning datasets require the same level of protection expected of any sensitive enterprise data: validated, access-controlled, and governed by strict data-handling standards.

- AI systems continue to grow rapidly in their capabilities, integration points, and architectural complexity. AI agents in particular are becoming more powerful through enhanced models, multi-step reasoning, and access to tools, systems, and data through technologies such as the Model Context Protocol (MCP) and retrieval-augmented generation (RAG). These tools must be tightly governed to ensure AI agents are not granted access to systems, privileges, or actions beyond what the organization explicitly intends.
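A minimal sketch of the input and output filters described above follows. The blocked phrases and redaction patterns are illustrative assumptions, and simple keyword and regex filters are easy to evade. Production deployments typically layer them with classifier-based guardrails, so treat this as a starting point only.

```python
import re

# Illustrative prompt-injection phrases; real deny lists are larger and evolve.
BLOCKED_INPUT_PHRASES = ("ignore previous instructions", "reveal your system prompt")

# Illustrative patterns for data the model should never return verbatim.
OUTPUT_PATTERNS = {
    "email": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "us_ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}


def check_input(prompt):
    """Return False if the prompt contains a blocked phrase (case-insensitive)."""
    lowered = prompt.lower()
    return not any(phrase in lowered for phrase in BLOCKED_INPUT_PHRASES)


def filter_output(text):
    """Replace each match of a sensitive pattern with a labeled placeholder."""
    for label, pattern in OUTPUT_PATTERNS.items():
        text = pattern.sub(f"[REDACTED:{label}]", text)
    return text
```

For example, `filter_output("Contact jane.doe@example.com, SSN 123-45-6789")` yields `"Contact [REDACTED:email], SSN [REDACTED:us_ssn]"`. Both blocked inputs and redactions are worth logging for the forensic review described above.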

7. Does My Code Have Any Exposed Credentials?

Secrets exposure is a leading cause of cloud compromise.

Key Considerations:

Effective secrets management remains one of the most persistent challenges for application development and operations teams. The most common risk involves static credentials embedded within applications, often found in Git repositories, Docker images, configuration files, and server backups. Mitigating these issues begins with a comprehensive understanding of each application: where its secrets are stored and how they are used.

- Scanning CI/CD pipelines for hard-coded keys and tokens is an important practice. It helps validate the integrity of the deployment architecture and confirms that credentials used during deployment come from short-lived identities rather than static secrets embedded in the pipeline itself.

- Implementing rotation policies for cloud access keys is also critical. Major cloud providers offer native tools that track credential age and last-used timestamps, making it easier to identify keys that should be rotated or removed.

- Finally, the use of centralized secrets managers and environment-based injection is the modern and preferred approach to credential handling. Applications and the resources that host them can authenticate using identity-based access controls, with short-lived tokens supplied through environment variables. These temporary credentials reduce exposure and limit the impact of any accidental disclosure.
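The two checks above, scanning for hard-coded secrets and flagging stale keys, can be sketched in a few lines. This is an illustration under stated assumptions, not a replacement for a dedicated scanner such as gitleaks or trufflehog. The regex rules are a small illustrative subset (AWS access key IDs begin with `AKIA` for long-term keys or `ASIA` for temporary ones), and the 90-day rotation limit is an assumed default.

```python
import re
from datetime import datetime, timedelta, timezone

# Small illustrative rule set; real scanners ship hundreds of tuned detectors.
SECRET_RULES = {
    "aws_access_key_id": re.compile(r"\b(?:AKIA|ASIA)[A-Z0-9]{16}\b"),
    "private_key_header": re.compile(r"-----BEGIN (?:RSA |EC |OPENSSH )?PRIVATE KEY-----"),
    "hardcoded_password": re.compile(r"""(?i)(?:password|passwd|secret)\s*[:=]\s*["'][^"']{8,}["']"""),
}


def scan_text(text):
    """Return (line_number, rule_name) for each line matching a secret rule."""
    findings = []
    for lineno, line in enumerate(text.splitlines(), start=1):
        for name, rule in SECRET_RULES.items():
            if rule.search(line):
                findings.append((lineno, name))
    return findings


def keys_due_for_rotation(keys, max_age_days=90, now=None):
    """keys: iterable of (key_id, created_at). Return IDs older than max_age_days."""
    if now is None:
        now = datetime.now(timezone.utc)
    limit = timedelta(days=max_age_days)
    return [key_id for key_id, created in keys if now - created > limit]
```

In a pipeline, `scan_text` would run over each changed file and fail the build on any finding. The creation timestamps for `keys_due_for_rotation` would come from the provider's credential age data.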

8. Where Are the Critical Vulnerabilities in the Context of Business Risk?

CVEs matter, but context determines urgency.

Key Considerations:

A proper security testing program is essential for your cloud environment. It should include routine assessments and extend beyond automated vulnerability scanning. Automated tools often produce large volumes of low-value findings, making it difficult to focus on what is truly critical.

- Vulnerabilities discovered on externally exposed workloads must be triaged with a high level of urgency. Publicly exposed workloads will inevitably be targeted by attackers. Promptly patching known vulnerabilities on public endpoints is essential, as cybercriminals routinely use automated scanners to identify exploitable systems.

- Privilege escalation paths enabled by misconfigurations can grant unintended access to identities. Configuration management and automated privilege monitoring are effective tools for identifying and preventing these risks.

- Dependencies and package-level risks represent supply chain vulnerabilities. Modern software relies on numerous components, often including open-source libraries or Docker images. Each component must be inventoried and monitored so that newly disclosed vulnerabilities can be addressed through timely patching and upgrades.

- Mapping each issue to mission impact and exploitability is critical for determining business risk. Services with high exposure and high importance to business operations require a greater level of urgency than those that are less critical or less easily exploitable.
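To make contextual prioritization concrete, the sketch below scales a base CVSS score by exposure, known exploitation, and business criticality. The weights, the 1-to-3 criticality scale, and the placeholder CVE identifiers are hypothetical assumptions chosen for illustration. Each organization should calibrate its own scheme, possibly using a known-exploited-vulnerabilities feed for the `exploit_known` flag.

```python
from dataclasses import dataclass


@dataclass
class Finding:
    cve_id: str
    cvss: float                # base severity score, 0.0 to 10.0
    internet_facing: bool      # reachable from the Internet?
    exploit_known: bool        # e.g. listed in a known-exploited-vulnerabilities feed
    business_criticality: int  # hypothetical scale: 1 (low) to 3 (mission critical)


def risk_score(f):
    """Hypothetical weighting: severity scaled by exposure, exploitability, criticality."""
    score = f.cvss
    score *= 2.0 if f.internet_facing else 1.0
    score *= 1.5 if f.exploit_known else 1.0
    score *= f.business_criticality
    return round(score, 1)


def prioritize(findings):
    """Order findings from highest to lowest contextual risk."""
    return sorted(findings, key=risk_score, reverse=True)
```

With these weights, a CVSS 7.5 flaw on an Internet-facing, mission-critical service with a known exploit (score 67.5) outranks a CVSS 9.8 flaw on an internal, low-criticality host (score 9.8).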

9. Is There an Active Exploit in My Cloud Environment?

Attackers now leverage automation at scale.

Key Considerations:

It is essential to understand your cloud architecture, determine its current state, and compare it to the expected state. This concept spans multiple security domains. If the current state diverges from the expected state, that deviation may be an indicator of compromise. Alerts, and in some cases automated response actions, should trigger when these indicators appear.

- Logs from every cloud provider should be aggregated and correlated in a SIEM to detect active exploits. In the cloud, nearly all activity is driven by API calls, and properly configured environments log each call (for example, through AWS CloudTrail, Azure Activity Log, or OCI Audit). Centralized log analysis and SIEM platforms can then detect anomalous activity across these sources.

- Detection of anomalous IAM behavior requires monitoring and thresholds for authentication and authorization failures. Security teams should receive notifications of suspicious patterns, and automated responses may be appropriate to block brute-force or privilege escalation attempts targeting cloud identities.

- Continuous monitoring and threat intelligence mapped to your cloud architecture are critical for detecting active exploits. Understanding normal workload behavior allows you to identify anomalies and potential threats, including malware activity, command-and-control traffic, and privilege abuse.
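A failure threshold like the one described above is often implemented as a per-identity sliding window. This minimal sketch assumes authentication failures have already been parsed from logs into (identity, timestamp) events arriving in time order. The `FailureMonitor` class, its default threshold of 5, and its 5-minute window are hypothetical values chosen for illustration.

```python
from collections import defaultdict, deque
from datetime import datetime, timedelta


class FailureMonitor:
    """Flag identities whose auth failures reach a threshold within a sliding window.

    Assumes events for each identity arrive in timestamp order.
    """

    def __init__(self, threshold=5, window=timedelta(minutes=5)):
        self.threshold = threshold
        self.window = window
        self._failures = defaultdict(deque)  # identity -> recent failure timestamps

    def record_failure(self, identity, ts):
        """Record one failure; return True once the identity reaches the threshold."""
        failures = self._failures[identity]
        failures.append(ts)
        # Discard failures that have aged out of the window.
        while ts - failures[0] > self.window:
            failures.popleft()
        return len(failures) >= self.threshold


if __name__ == "__main__":
    monitor = FailureMonitor(threshold=3, window=timedelta(minutes=5))
    start = datetime(2025, 6, 1, 12, 0)
    for minute in (0, 1, 2):
        if monitor.record_failure("user/alice", start + timedelta(minutes=minute)):
            # A real deployment might alert the SOC or temporarily lock the identity.
            print("threshold reached for user/alice")
```

Failures spread out over time never accumulate, so the window separates bursts that resemble brute forcing from occasional mistyped passwords.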

10. How Do I Respond Quickly?

Your operational readiness determines resilience.

Key Considerations:

The same efficiency and automation that make the cloud innovative can be leveraged during incident response. A wide range of vendor-supplied and open-source tools can automate detection, response, forensics, and remediation.

- Playbooks aligned to cloud-native services and built around automation tools such as infrastructure as code and configuration management can significantly improve response time. Because cloud vendors deliver standardized services, documentation is consistent, and solutions can often be shared across teams, organizations, or open-source communities.

- Automated containment actions (e.g., isolate an instance, rotate a key, disable a role) are straightforward to implement because all cloud operations are executed through API calls. Numerous tools and technologies can respond to security events and invoke API actions for rapid containment.

- Cross-team escalation paths for cloud, security, and DevOps teams improve incident response times. Cloud adoption often shifts team topologies and role responsibilities, leading many organizations to restructure traditional infrastructure teams into service teams with interdisciplinary skill sets. Clear escalation paths help ensure coordinated response during security events.

- Real-time metrics and dashboards are frequently introduced during cloud migrations to enhance operational visibility. These dashboards also reduce decision latency and accelerate response when attacks occur.
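The automated containment actions mentioned above are often wired together as a dispatch table that maps detection event types to playbook steps. In the sketch below, the event type names and step functions are hypothetical stand-ins. A real implementation would call the provider's APIs, for example to swap an instance's security group or deactivate an access key. Here each step only returns a description so the routing logic can be tested safely.

```python
from collections.abc import Callable


# Hypothetical containment steps. In a real playbook each would call the provider's
# API; here they only describe the action so routing can be tested without side effects.
def isolate_instance(target: str) -> str:
    return f"isolate instance {target}"


def rotate_key(target: str) -> str:
    return f"deactivate and rotate key {target}"


def disable_role(target: str) -> str:
    return f"disable role {target}"


# Hypothetical mapping from detection event types to ordered containment steps.
PLAYBOOK: dict[str, list[Callable[[str], str]]] = {
    "malware_detected": [isolate_instance],
    "credential_exposed": [rotate_key],
    "privilege_escalation": [disable_role],
}


def contain(event_type: str, target: str) -> list[str]:
    """Run each containment step mapped to the event; unknown events go to a human."""
    steps = PLAYBOOK.get(event_type)
    if not steps:
        return [f"escalate {event_type} on {target} to on-call responder"]
    return [step(target) for step in steps]
```

Routing unrecognized events to a person, rather than dropping them, keeps the escalation paths described above in the loop when automation has no answer.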

Final Thoughts

These ten questions are designed to build situational awareness in modern cloud environments. When used as the foundation for scoping and planning cloud security initiatives, they help security leaders gain clarity on exposure, control gaps, and operational risks.

In 2025, cloud security success hinges on strong system and event visibility, disciplined configuration management, solid identity hygiene, and the ability to respond quickly when behaviors drift from expected baselines.

Our expertise spans all major cloud platforms. If you are planning a cloud migration (see our considerations for moving to the cloud), security assessment (learn what to expect from our AWS security assessment process), or require penetration testing in cloud environments (explore our cloud penetration testing services), we have the skills to protect your systems.

Ready to strengthen your AWS environment? Whether you’re preparing for compliance, addressing a recent misconfiguration, or proactively managing risk, our team is ready to help. We will provide a clear understanding of your current cloud security posture and deliver a practical roadmap to enhance it.

Learn more about common security gaps in AWS.

Schedule a free introduction call and learn how we can help you gain confidence in your cloud systems.